Introducing ROMA (Recursive Open Meta-Agent): an open-source meta-agent framework for building high-performance multi-agent systems. ROMA orchestrates simpler agents and tools to solve complex problems. At its core, ROMA provides a structure for multi-agent systems: a hierarchical, recursive task tree where parent nodes break a complex goal into subtasks, pass them down to child nodes as context, and later aggregate the children’s solutions as results flow back up. By dictating this structure of context flow, ROMA makes it straightforward to build agents that reliably handle medium- to long-horizon tasks requiring many steps.

For example, you might want an agent to write you a report on the climate differences between LA and New York. A parent node could break this into specific subtasks: subtask 1 researches LA’s climate, subtask 2 researches NYC’s climate, utilizing specialized agents and tools like an AI search model or weather APIs. Once both subtasks are complete, the parent node creates a final comparison task that analyzes the climate differences between the two cities, then aggregates these results into a comprehensive report.

ROMA makes building high-performance multi-agent systems straightforward. With structured Pydantic inputs and outputs, the flow of context is transparent and fully traceable. Builders can see exactly how reasoning unfolds, enabling easy debugging, prompt refinement, and agent swapping. Unlike black-box systems, this transparency enables fast iteration in context engineering. ROMA’s modular design also means you can plug in any agent, tool, or model at the node level, from specialized LLM-based agents to human-in-the-loop checkpoints. Finally, the tree-based structure lends itself naturally to parallelization, delivering both flexibility and high performance for large, demanding problems.
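As a rough illustration of what structured, traceable node inputs and outputs could look like, here is a minimal sketch. It uses standard-library dataclasses as a stand-in for Pydantic models so that it runs without extra dependencies. The names `TaskInput`, `TaskOutput`, `run_node`, and `echo_agent` are hypothetical and are not ROMA’s actual API.

```python
from dataclasses import dataclass, field, asdict
from typing import Callable, Dict, List


@dataclass
class TaskInput:
    goal: str
    # Results handed down from a parent or from earlier sibling nodes.
    context: List[str] = field(default_factory=list)


@dataclass
class TaskOutput:
    goal: str
    result: str


def run_node(task: TaskInput,
             agent: Callable[[TaskInput], str],
             trace: List[Dict]) -> TaskOutput:
    """Run one node with any agent callable and record what went in and out."""
    output = TaskOutput(goal=task.goal, result=agent(task))
    trace.append({"input": asdict(task), "output": asdict(output)})
    return output


def echo_agent(task: TaskInput) -> str:
    # Placeholder for an LLM, a search tool, or a human checkpoint.
    return f"answered: {task.goal} (context items: {len(task.context)})"


trace: List[Dict] = []
out = run_node(
    TaskInput(goal="Research LA's climate", context=["scope: yearly averages"]),
    echo_agent,
    trace,
)
print(out.result)
print(trace[0]["input"])
```

Because the agent is just a callable that takes a typed input, swapping it for a different model or tool would not change the surrounding code, and every call leaves a plain-dictionary record that can be inspected afterwards.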

To demonstrate the framework’s efficacy, we built ROMA Search, an internet search agent that leverages ROMA’s architecture rather than utilizing domain-specific optimizations. On Seal-0, the challenging subset of the SEALQA benchmark that tests complex, multi-source reasoning, ROMA Search achieves 45.6% accuracy, making it the state-of-the-art system. This beats the previous best performer, Kimi Researcher, at 36% accuracy, and more than doubles Gemini 2.5 Pro’s performance at 19.8%. Among open-source models, ROMA Search significantly outperforms the next best system, Open Deep Search (also created by Sentient), which achieves 8.9% accuracy.

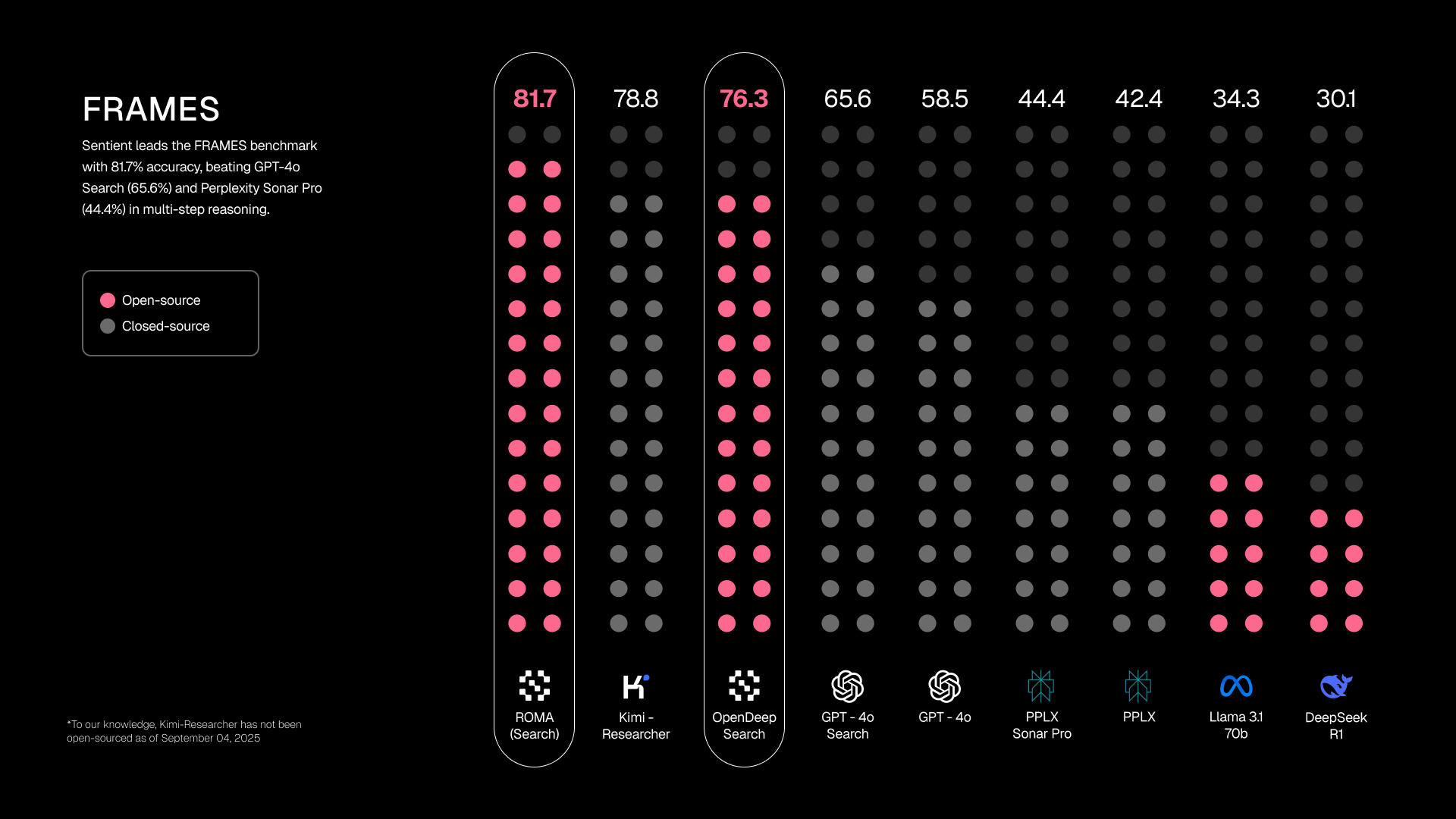

ROMA Search achieved state-of-the-art performance on FRAMES (multi-step reasoning) and near state-of-the-art results on SimpleQA (factual knowledge retrieval), demonstrating the system’s effectiveness across different types of search challenges.

Most importantly, ROMA is open-source and extensible by design. Search is just the beginning: anyone can plug in new agents, extend the framework with custom tools, or adapt it for domains ranging from financial analysis to creative content generation. ROMA provides the backbone – the real breakthroughs will come from what the community builds on top of it.

Why Long-Horizon Tasks Break Agents

AI has made impressive strides on single-step tasks. Ask a model to summarize an article, write a short email, or solve a math problem, and it will often succeed. There remains, however, the challenge of building agents that perform well on long-horizon tasks – tasks that require many steps of reasoning or action to reach the goal.

The problem is that errors compound. An AI might be 99% reliable at any one step, but string many steps together and the chance of success drops sharply: if steps fail independently, a ten-step chain succeeds only about 90% of the time, and a hundred-step chain only about 37% of the time. A single hallucination, a misapplied instruction, or a lost piece of context can derail the entire process. This fragility makes it especially hard for agents to handle tasks that span multiple subtasks and require reasoning across sources.
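The arithmetic behind compounding errors is easy to check for other reliability levels. The sketch below assumes that steps succeed or fail independently, which is a simplification, and the helper name `chain_success` is purely illustrative.

```python
def chain_success(per_step: float, steps: int) -> float:
    """Probability that every step in a chain succeeds, assuming independence."""
    return per_step ** steps


# Compare a few per-step reliabilities across increasingly long chains.
for per_step in (0.99, 0.95):
    for steps in (1, 10, 50, 100):
        rate = chain_success(per_step, steps)
        print(f"{per_step:.0%} per step, {steps:>3} steps -> {rate:.1%} overall")
```

Even a small drop in per-step reliability is amplified by chain length, which is why an architecture that contains and checks errors at each node matters more as tasks get longer.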

Addressing this fragility requires solving two tightly coupled challenges:

- The meta-challenge (architecture): How do we design agent systems that reliably execute long-horizon reasoning despite compounding errors?

- The task-specific challenge (instantiation): Given a concrete goal, which decomposition, tools, models, prompts, and verification steps make this particular agent robust and accurate?

Search is a great case study because it stresses both challenges at once. It’s inherently multi-step (retrieve → read → extract → cross-check → synthesize) and tightly bound to up-to-date, real-world knowledge. Consider the question, “How many movies with an estimated net budget of $350 million or more were not the highest-grossing film of their release year?” To answer this, an agent must:

- Break the query into parts (find expensive films, find the highest-grossing films across years).

- Gather fresh data from multiple sources.

- Reason about the results to satisfy the query logic.

- Synthesize a clean, final answer.

Even with this relatively simple query, there are many failure points: models may hallucinate, misalign release years, or loop inefficiently. To make matters worse, traditional agent frameworks often hide their internal reasoning, making it hard to improve or adapt them.

If we solve the meta-challenge with a solid meta-agent architecture, the task-specific challenge reduces to making smart instantiation choices: selecting the right tools and agents, crafting effective prompts, and adding targeted human checks. When the architecture also provides transparency into how context flows between steps, those choices become far easier to refine and improve. That’s exactly the role ROMA plays.

ROMA’s Architecture: From Goals to Results

ROMA addresses the long-horizon challenge by providing a recursive, hierarchical structure for agent systems. Every task is represented as a node, which can either execute directly, break itself down into subtasks, or aggregate the results of its children. This tree-like structure makes the flow of context explicit, traceable, and easy to refine. With this backbone in place, building robust agents becomes a matter of choosing the right tools, prompts, or verification strategies for each node.

Let’s walk through how ROMA Search would tackle the above example. Note that the node types (Atomizer, Planner, Executor, Aggregator) are shared across ROMA, while the prompts, agents, and tools at each step are specific to ROMA Search.

1: Atomizer – assess the task.

The process begins with the main goal node. ROMA’s Atomizer step determines whether the task is simple enough for a single agent to complete, or whether it needs to be broken down.

2: Planner – decompose into subtasks.

Here, the goal is complex, so the node becomes a Planner. It splits the goal into simpler pieces:

- Search for films with an estimated net production budget of at least $350 million and record their titles, budgets, and release years.

- Search for the worldwide highest-grossing film for each release year covered by the highest-budget films list (2000-present) and capture title and gross earnings.

- Analyze the gathered data to compile the list of desired movies.

Each subtask becomes a child Atomizer node. Note that these children are sequentially dependent (later subtasks rely on earlier outputs). ROMA generates a tree of tasks, and siblings may be dependent or independent, giving a clear structure for context engineering while preserving flexibility.
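One way to picture sibling dependencies is as a small dependency graph that fixes a valid execution order. The sketch below uses Python’s standard-library `graphlib` (Python 3.9+); the subtask identifiers are made-up labels for the three subtasks above, and this is not a description of how ROMA schedules nodes internally.

```python
from graphlib import TopologicalSorter

# Each key is a subtask; its value is the set of sibling subtasks it depends on.
dependencies = {
    "find_big_budget_films": set(),
    "find_top_grossing_by_year": {"find_big_budget_films"},
    "analyze_lists": {"find_big_budget_films", "find_top_grossing_by_year"},
}

# static_order() yields subtasks so that every dependency comes first.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

In this example the chain is strictly sequential, so only one order is valid. Siblings with no dependency between them would be free to run in any order, or at the same time.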

3: Executors – perform the subtasks.

Once the Atomizer decides a subtask is simple enough to execute directly, the node becomes an Executor. Executors call the appropriate tools/agents (e.g., search APIs, extraction models), then pass their outputs to the next dependent subtask. The final subtask returns its result to the parent.

4: Aggregators – combine results.

Once all Executors finish, the parent node becomes an Aggregator. It collects child outputs, verifies consistency, and synthesizes the final answer – in this case, a clean list of the movies that satisfy the query’s constraints.
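The four steps above can be sketched as a single recursive function. This is a simplified illustration under stated assumptions, not ROMA’s implementation: `solve` and the four stub callables are hypothetical, the stubs replace real LLM and tool calls with string operations, and siblings are run strictly in sequence with earlier results passed along as context.

```python
from typing import Callable, List


def solve(goal: str,
          context: List[str],
          is_atomic: Callable[[str], bool],
          plan: Callable[[str], List[str]],
          execute: Callable[[str, List[str]], str],
          aggregate: Callable[[str, List[str]], str],
          depth: int = 0,
          max_depth: int = 3) -> str:
    # 1. Atomizer: decide whether the goal can be executed directly.
    if depth >= max_depth or is_atomic(goal):
        # 3. Executor: perform the task using the context gathered so far.
        return execute(goal, context)
    # 2. Planner: decompose the goal into subtasks.
    results: List[str] = []
    for subtask in plan(goal):
        # Earlier sibling results are appended to the context of later ones.
        results.append(solve(subtask, context + results, is_atomic, plan,
                             execute, aggregate, depth + 1, max_depth))
    # 4. Aggregator: combine child results into one answer.
    return aggregate(goal, results)


# Toy stand-ins so the sketch runs without any model or search tool.
def is_atomic(goal: str) -> bool:
    return " and " not in goal


def plan(goal: str) -> List[str]:
    return [part.strip() for part in goal.split(" and ")]


def execute(goal: str, context: List[str]) -> str:
    return f"<{goal}|ctx={len(context)}>"


def aggregate(goal: str, results: List[str]) -> str:
    return " + ".join(results)


answer = solve(
    "find expensive films and find top-grossing films and compare the lists",
    [], is_atomic, plan, execute, aggregate,
)
print(answer)
```

The `max_depth` guard is an added safety assumption that forces execution once the tree gets deep, so a planner that keeps decomposing cannot recurse forever.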

Human-in-the-Loop & Stage Tracing

At any node, a human can verify facts or add context – especially useful for very long-horizon tasks where hallucinations or gaps are likely. After planning, ROMA can also ask the user to confirm the subtasks, catching misunderstandings early. Even without human intervention, stage tracing (viewing inputs/outputs at every node) provides the transparency and control needed to diagnose errors and iterate quickly.
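A minimal sketch of both ideas, assuming a stage is just a callable: a wrapper that records each stage’s input and output, and a review hook that runs after planning. The names `traced`, `plan_with_review`, and `scripted_reviewer` are hypothetical, and the reviewer here is scripted so the example runs unattended; a real checkpoint might prompt a person instead.

```python
from typing import Callable, Dict, List

Trace = List[Dict[str, object]]


def traced(stage: str, fn: Callable[[str], object], trace: Trace) -> Callable[[str], object]:
    """Wrap a stage so that every call logs its input and output."""
    def wrapper(task: str) -> object:
        result = fn(task)
        trace.append({"stage": stage, "input": task, "output": result})
        return result
    return wrapper


def plan_with_review(goal: str,
                     planner: Callable[[str], List[str]],
                     review: Callable[[str, List[str]], List[str]]) -> List[str]:
    """Let a reviewer confirm or amend the proposed subtasks before execution."""
    proposed = planner(goal)
    return review(goal, proposed)


def scripted_reviewer(goal: str, subtasks: List[str]) -> List[str]:
    # Stand-in for a person: returns a new list with one missing subtask added.
    return subtasks + ["compare the two climates"]


trace: Trace = []
planner = traced("planner", lambda goal: ["research LA", "research NYC"], trace)
subtasks = plan_with_review("compare LA and NYC climate", planner, scripted_reviewer)

print(subtasks)
print(trace)
```

The trace keeps the planner’s original proposal, so the difference between what was planned and what the reviewer approved stays visible afterwards.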

Scaling Further

We kept the example to a single subtask layer to show how nodes behave. In practice, ROMA scales to many layers of recursion for complex goals, forming deep task trees. When sibling nodes are independent, ROMA executes them in parallel, so large jobs with hundreds or thousands of nodes remain fast.
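To illustrate why independent siblings parallelize well, here is a small sketch using Python’s `asyncio`. The coroutine `run_executor` is a hypothetical stand-in that sleeps instead of calling a search tool or model, and nothing here is meant to describe ROMA’s actual scheduler.

```python
import asyncio
import time
from typing import List


async def run_executor(subtask: str, delay: float = 0.1) -> str:
    # The sleep stands in for a slow network or model call.
    await asyncio.sleep(delay)
    return f"done: {subtask}"


async def run_independent_siblings(subtasks: List[str]) -> List[str]:
    # gather() runs the coroutines concurrently and returns results in input order.
    return list(await asyncio.gather(*(run_executor(s) for s in subtasks)))


start = time.perf_counter()
results = asyncio.run(run_independent_siblings(["LA climate", "NYC climate"]))
elapsed = time.perf_counter() - start

print(results)
print(f"elapsed: {elapsed:.2f}s")
```

Because both subtasks wait at the same time, the total wall-clock time stays close to that of the slowest sibling rather than the sum of all of them.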

Ready to build the future of AI agents?

ROMA Search is just the beginning. We’ve made it open-source and extensible so you can push the boundaries of what’s possible.

- For Builders: Start experimenting with building agents in ROMA. Swap in different agents, test multi-modal capabilities, or customize prompts to build agents that produce anything from creative content like comics and podcasts to analytical work like research reports.

- For Researchers: Advance the field by building on ROMA’s foundation. Our transparent stage tracing gives you insights into agent interactions and context flow – perfect for developing next-generation meta-agent architectures.

While proprietary systems advance at the pace of a single company, ROMA evolves with the entire community’s collective efforts. Get started with ROMA today:

- GitHub Repo: https://github.com/sentient-agi/ROMA

- Video Presentation: https://youtu.be/ghoYOq1bSE4?feature=shared

References

¹ https://arxiv.org/pdf/2506.01062

² https://moonshotai.github.io/Kimi-Researcher/

³ https://arxiv.org/pdf/2409.12941

⁴ https://openai.com/index/introducing-simpleqa/